How do I deploy my Symfony API - Part 1 - Development

This is the first in a series of blog posts on how to deploy a Symfony-based PHP application to a Docker Swarm cluster hosted on Amazon EC2 instances. This post focuses on the development environment.

In this series I'm going to share the deployment strategy I used to deploy an API-centric application to AWS for a customer, describing the whole process from development to production.

The application was a more-or-less basic API built with Symfony 3.3 and a few other components such as Doctrine ORM and FOSRestBundle. Obviously, the source code was stored in a Git repository.

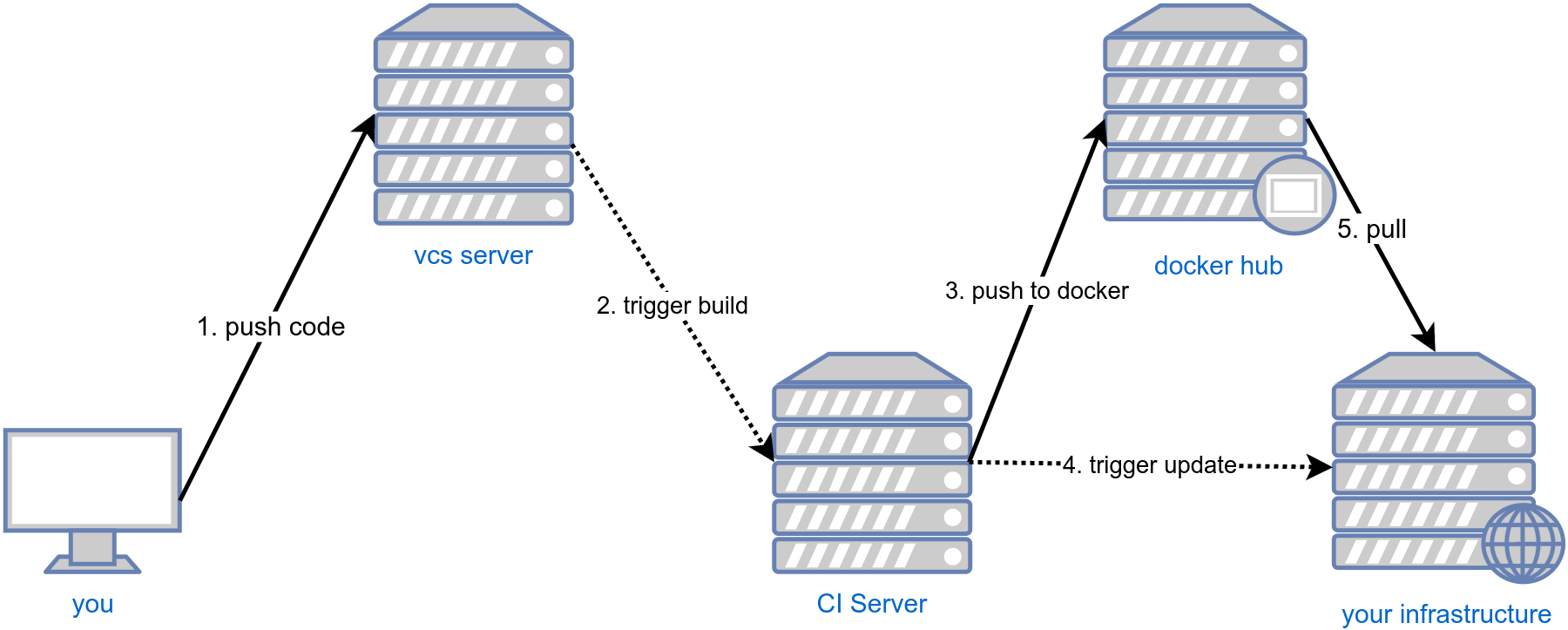

When the application was completed, I used CircleCI (which offers one container for private builds for free) to coordinate the "build and deploy" process.

The process is summarized in the schema above.

The code was hosted on GitHub, and CircleCI was configured to trigger a build on each commit.

As development flow I used GitFlow:

- each commit to master triggered a deploy to live;
- each commit to a release branch triggered a deploy to a pre-live environment;
- each commit to develop triggered a deploy to staging;
- each commit to a feature branch triggered a deploy to a test environment.

The deploy to live had a manual confirmation step.
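The branch-to-environment mapping above can be sketched as a small shell function that a CI job could call before deploying. This is a minimal sketch, not the script used in the project: it assumes GitFlow's default feature/ and release/ branch prefixes and, on CircleCI, the built-in CIRCLE_BRANCH variable; the function name target_env is hypothetical.

```shell
#!/bin/sh
# Minimal sketch (not the project's actual CI script): choose the deploy
# target for a GitFlow branch. Assumes GitFlow's default "feature/" and
# "release/" prefixes; target_env is a hypothetical helper name.
set -eu

target_env() {
  case "$1" in
    master)    echo "live" ;;      # live deploys also need manual confirmation
    develop)   echo "staging" ;;
    release/*) echo "pre-live" ;;
    feature/*) echo "test" ;;
    *)         echo "none" ;;      # any other branch: no deploy
  esac
}

# On CircleCI the current branch is exposed as CIRCLE_BRANCH;
# fall back to "develop" when running the sketch locally.
target_env "${CIRCLE_BRANCH:-develop}"   # prints "staging" when CIRCLE_BRANCH is unset
```

In a case statement the * pattern also matches slashes, so release/1.2.0 and feature/login are caught by their prefixes.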

Development environment

Obviously everything starts from the development environment.

For the development environment I used Docker and Docker Compose.

A lot of emphasis was put on keeping each component stateless. From the Docker point of view, this meant that it was not possible to use folders shared between the container and the host to store the state of the application (sessions, logs, ...).

Not storing data on the host is fundamental to deploy the application seamlessly to live. Logs, sessions, uploads and user data have to be stored in specialized services such as log management tools, key-value stores or object storage (AWS S3 or similar).

Docker compose file

Docker compose is a tool that allows you to configure and coordinate containers.

```yaml
# docker-compose.yml
version: '3.3'

services:
  db:
    image: goetas/api-pgsql:dev
    build: docker/pgsql
    ports:
      - "5432:5432"
    volumes:
      - db_data:/var/lib/postgresql/data/pgdata
    environment:
      PGDATA: /var/lib/postgresql/data/pgdata
      POSTGRES_PASSWORD: api

  php:
    image: goetas/api-php:dev
    build:
      context: .
      dockerfile: docker/php-fpm/Dockerfile
    volumes:
      - .:/var/www/app

  www:
    image: goetas/api-nginx:dev
    build:
      context: .
      dockerfile: docker/nginx/Dockerfile
    ports:
      - "7080:80"
    volumes:
      - .:/var/www/app

volumes:
  db_data: {}
```

From this docker-compose.yml we can see that we have PHP, Nginx and a PostgreSQL database.

Note the compose file version, 3.3: version 3 files can also be deployed as a Docker stack (docker stack deploy), either on a single Docker host running in swarm mode or on a Docker Swarm cluster. When deploying a stack, the build sections are ignored, so the images have to be built and pushed beforehand.

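Note also the build section of the php and www services: the images are built from the specified Dockerfile, but the build context (the directory from which the COPY instructions read their files) is the project root, ".".

This allows building Docker images that already contain the source code of the application while keeping a nice folder structure. In development, the .:/var/www/app volume mounts the working copy over that code, so changes are visible without rebuilding the images.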

The db container is used only for the development and test environments (where performance and high availability are not necessary); in live, an Amazon RDS instance was used instead.

Docker files

From the previous docker-compose.yml file we can see that we are using "custom" images for each service.

Let's have a look at them.

DB (postgres)

docker/pgsql/Dockerfile

```dockerfile
FROM postgres:9.6

COPY bin/init-user-db.sh /docker-entrypoint-initdb.d/init-user-db.sh
```

docker/pgsql/bin/init-user-db.sh

```bash
#!/bin/bash
set -e

psql -v ON_ERROR_STOP=1 --username "$POSTGRES_USER" <<-EOSQL
    CREATE USER mydbuser PASSWORD 'XXXXX';
    CREATE DATABASE mydb;
    GRANT ALL PRIVILEGES ON DATABASE mydb TO mydbuser;
EOSQL
```

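This Dockerfile is trivial: it extends the official postgres:9.6 image and adds a script that creates a user and a database when the container is spun up. The official image runs the scripts in /docker-entrypoint-initdb.d only when the data directory is empty, that is, on the first start.

Data is persisted on the host machine in a Docker volume (db_data), as specified in the volumes section of the docker-compose.yml file.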

EDIT (2017-09-24): Thanks to Michał Mleczko, I found out that the custom build script is not really necessary, since the official postgres image allows configuring the creation of the default database via environment variables. To have Postgres running, something like this is sufficient:

```yaml
db:
  image: postgres:9.6
  ports:
    - "5432:5432"
  volumes:
    - db_data:/var/lib/postgresql/data/pgdata
  environment:
    PGDATA: /var/lib/postgresql/data/pgdata
    POSTGRES_USER: mydb # postgres user
    POSTGRES_DB: mydb # postgres db name
    POSTGRES_PASSWORD: XXX # postgres password
```

PHP

This is the docker/php-fpm/Dockerfile file to build the PHP image.

```dockerfile
# docker/php-fpm/Dockerfile
FROM php:7.1-fpm

RUN usermod -u 1000 www-data
RUN groupmod -g 1000 www-data

# install system basics
RUN apt-get update -qq && apt-get install -y -qq \
        libfreetype6-dev \
        libjpeg62-turbo-dev \
        libmcrypt-dev \
        libpng12-dev git \
        zlib1g-dev libicu-dev g++ \
        wget libpq-dev

# install some php extensions
RUN docker-php-ext-install -j$(nproc) iconv mcrypt bcmath intl zip opcache pdo_pgsql \
    && docker-php-ext-configure gd --with-freetype-dir=/usr/include/ --with-jpeg-dir=/usr/include/ \
    && docker-php-ext-install -j$(nproc) gd \
    && docker-php-ext-enable opcache

# install composer
RUN curl -s https://getcomposer.org/composer.phar > /usr/local/bin/composer \
    && chmod a+x /usr/local/bin/composer

# copy some custom configurations for PHP and FPM
COPY docker/php-fpm/ini/app.ini /usr/local/etc/php/conf.d/app.ini
COPY docker/php-fpm/conf/www.pool.conf /usr/local/etc/php-fpm.d/www.pool.conf

WORKDIR /var/www/app

# copy the source code
COPY composer.* ./
COPY app app
COPY bin bin
COPY src src
COPY web/index.php web/index.php
COPY var var
COPY vendor vendor

# set up correct permissions to run the next composer commands
RUN if [ -e vendor/composer ]; then chown -R www-data:www-data vendor/composer vendor/*.php app/config web var; fi

# generate the autoloaders and run composer scripts
USER www-data
RUN if [ -e vendor/composer ]; then composer dump-autoload --optimize --no-dev --no-interaction \
    && APP_ENV=prod composer run-script post-install-cmd --no-interaction; fi
```

This is the PHP image. There is not much to say except for the permission fix: files added with COPY are always owned by root, so the chown command was necessary for the folders that are written to by composer dump-autoload and composer run-script post-install-cmd, which run as www-data.

WWW (nginx)

```dockerfile
# docker/nginx/Dockerfile
FROM nginx:stable

# copy some custom configurations
COPY docker/nginx/conf /conf

# copy the source code
COPY web /var/www/app/web
```

The web server image is also trivial: it copies some configuration (mainly the virtual host setup) and the content of the web directory, which, following the Symfony recommendations, is the only directory exposed by the web server.

Running

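By typing in your shell:

```bash
docker-compose up -d
```

the development environment is up and ready! Given the "7080:80" port mapping of the www service, the application is reachable on port 7080 of the host.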

Tips

Here are two tips on how to manage some aspects of your application.

Environment variables

The setup shown above is sufficient, but to create more flexible images it was fundamental for me to be able to use environment variables in configuration files.

To do so, I used a helper script that replaces the environment variable placeholders in a file with their values.

The following file was copied into each image, via its Dockerfile, to /conf/substitute-env-vars.sh:

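```perl
#!/usr/bin/perl -p
# For every ${NAME} or ${NAME:default} placeholder on each input line, print the
# value of the environment variable NAME if it is defined, otherwise the default,
# otherwise the placeholder itself, unchanged.
s/\$\{([^:}]+)(:([^}]+))?\}/defined $ENV{$1} ? $ENV{$1} : (defined $3 ? "$3": "\${$1}")/eg
```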

All configuration files were always copied to /conf.

At container start, the Docker ENTRYPOINT was responsible for replacing the placeholders with the runtime values of the environment variables and for placing the resulting files in the right location.

This is a portion of the entrypoint file:

```bash
#!/bin/bash
set -e

/conf/substitute-env-vars.sh < /conf/app.ini > /usr/local/etc/php/conf.d/app.ini
/conf/substitute-env-vars.sh < /conf/nginx.conf > /etc/nginx/conf.d/www.conf

## here the rest of your entrypoint ...
```

With this script we can use environment variables in every configuration file. For example, it was possible to specify different host names for the nginx containers without rebuilding the images, just by setting the appropriate environment variables.

Let's consider the following nginx configuration file:

```nginx
server {
    server_name ${APP_SERVER_NAME:project.dev};
    root ${APP_SERVER_ROOT:/var/www/app/web};
}
```

APP_SERVER_NAME and APP_SERVER_ROOT are environment variables; when they are set to www.example.com and /home/me/www respectively, the resulting file will be:

```nginx
server {
    server_name www.example.com;
    root /home/me/www;
}
```

If a variable is not defined, its placeholder is replaced by the default value (project.dev and /var/www/app/web in this case). A variable that is defined but empty is replaced by an empty string.

If the variable is not defined and no default value is specified, the placeholder is left unchanged: the script prints ${NAME} back as is.

Logs

By default, Symfony writes logs to files, which, when using Docker, is often not the best approach: as explained above, containers should not hold state.

I configured Monolog to write logs directly to the standard error stream. This later allows configuring Docker to collect and store the logs via one of the supported logging drivers (json-file, syslog, gelf, awslogs, splunk and many others).

Here is the config_prod.yml snippet:

```yaml
monolog:
    handlers:
        main:
            type: fingers_crossed
            action_level: error
            handler: nested
            buffer_size: 5000 # log up to 5000 items
            excluded_404s: # do not consider 404 as errors
                - ^.*
        nested:
            type: stream
            path: "php://stderr"
            level: debug
```

Note the buffer_size option: if you have long-running scripts (CLI daemons), it is fundamental to avoid memory leaks.

By default, the fingers_crossed handler keeps every log record in memory, waiting for an error critical enough to flush the buffer; but a "good" application should not have errors, so the buffer would never be flushed and would keep growing. With that option, only the last 5000 log records are kept.

Conclusion

In this post I've shared my local development environment. Many things can be improved; some of them were fundamental for my project, but for brevity I could not include them in this post.

Some improvements that can be done:

- Reducing image size by deleting temporary files after the installation of libraries is done

- Removing composer

- ?

In the next posts we will see how to move the local development environment through the build pipeline to the live environment.

Did I miss something? Feedback is welcome.